Adding a Snowflake Connection

IOTA Vue connects to Snowflake databases using the Generic SQL Driver. This driver comes pre-configured and ready to use. To establish a new Snowflake database connection, follow these steps:

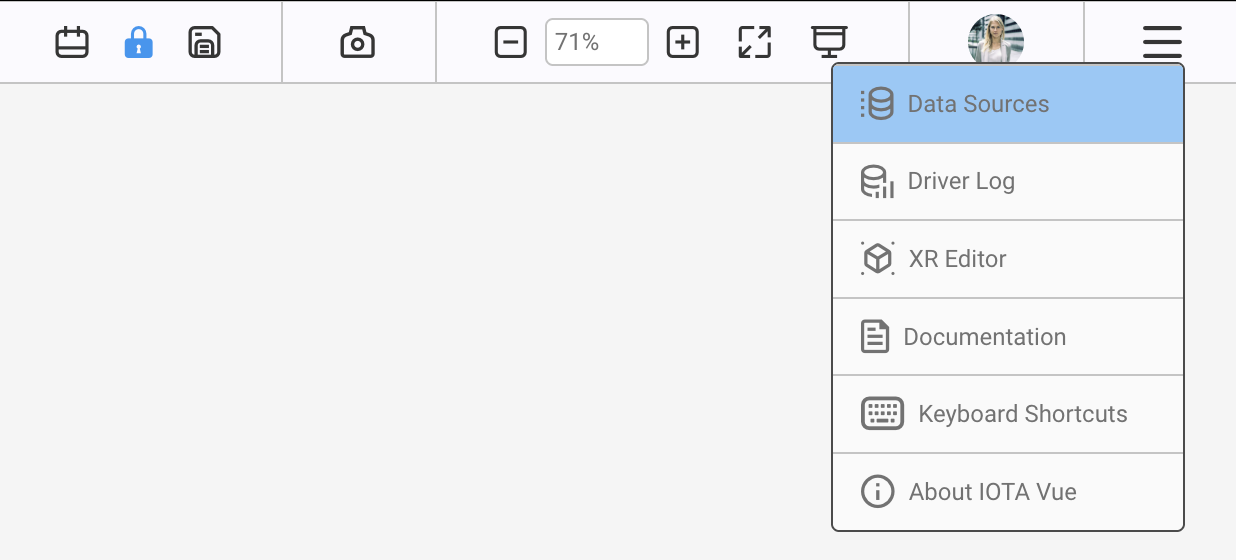

- Click the hamburger icon (☰) in the top-right corner and select Data Sources.

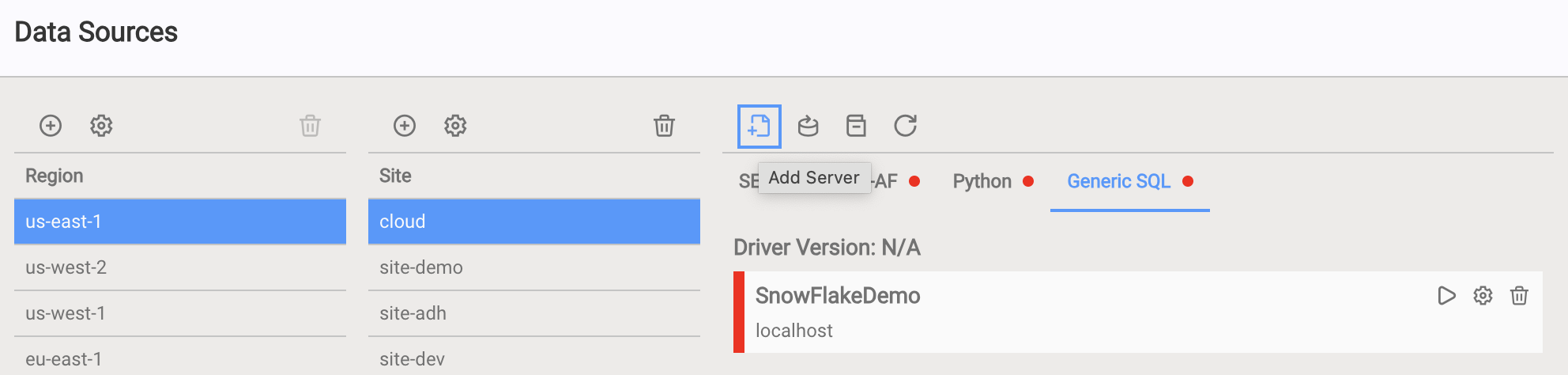

- Select your Region.

- Select your Site.

- Open the GenericSQL tab.

- Click the “Add” button in the top-left corner.

This opens the Snowflake Connection dialog.

About Regions and Sites (expand for details)

IOTA Vue organizes database connections into a flexible, mesh-like topology:

- Region usually represents a geographical area or logical group (e.g.,

us-east-1). - Site typically refers to a specific building, plant, or local deployment (e.g.,

siteB).

The installer already includes pre-configured Regions and Sites for simplicity, but users can create additional ones if needed:

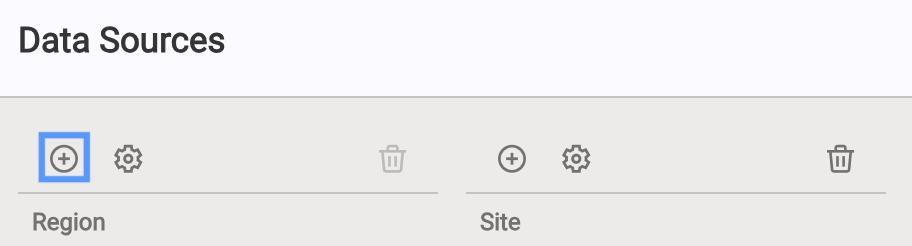

- Add a Region:

Click Add under Regions, provide a name and optional channel abbreviation, and click Apply.

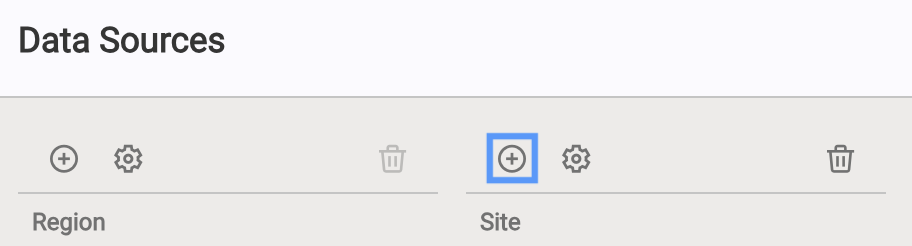

- Add a Site:

Select a Region, click Add under Sites, enter a site name, select GenericSQL as the driver type, and click Apply.

This structure helps organize and manage multiple database connections efficiently.

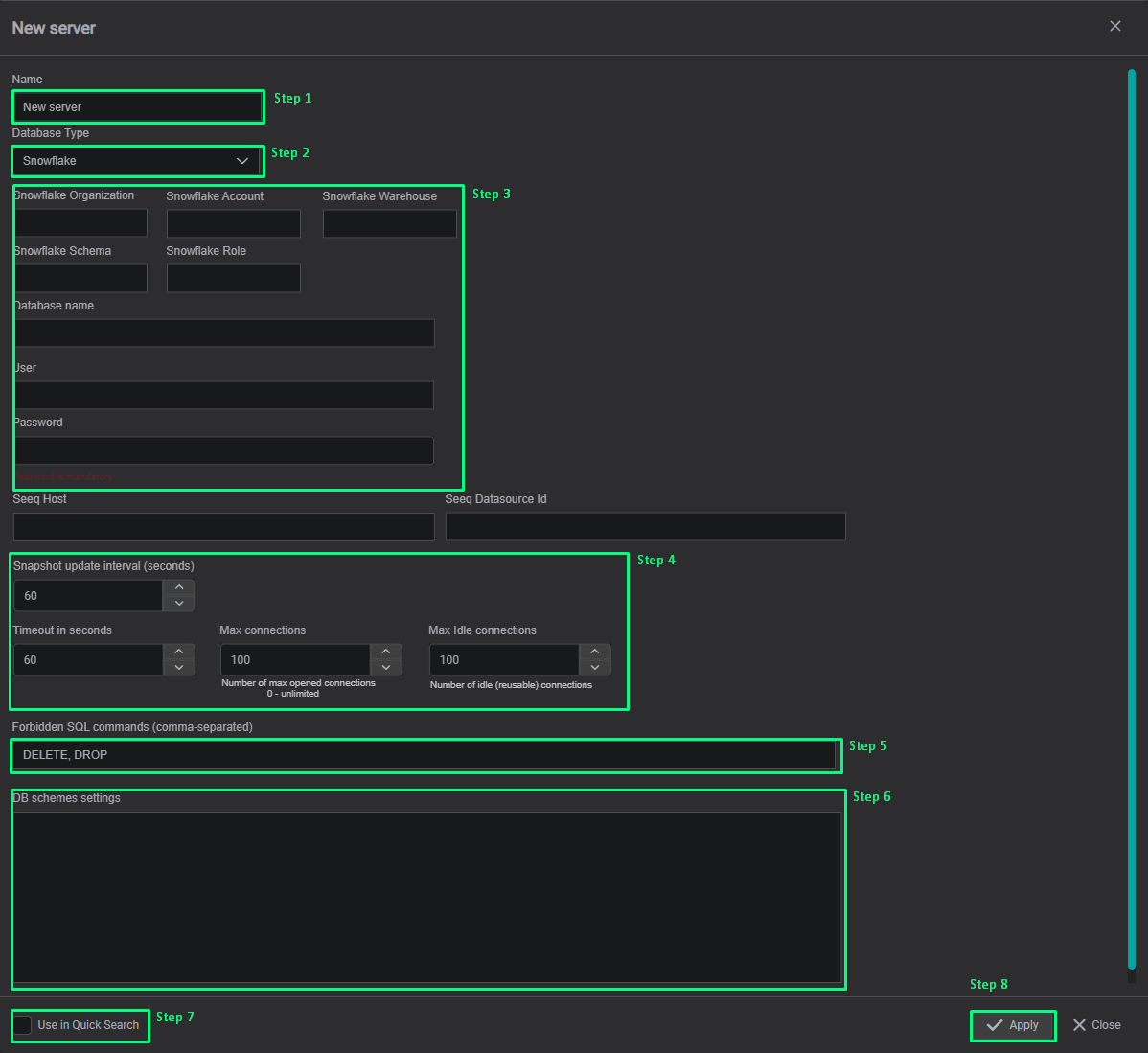

Configuring the Snowflake Connection

The Snowflake connection dialog defines how IOTA Vue connects to your Snowflake database.

Follow these configuration steps:

1. Name Your Connection

Provide a clear, recognizable name for your connection. This name will prefix all search results.

2. Select Database Type

Select Snowflake from the database type dropdown.

3. Enter Connection Information

Fill in the required details to establish your connection:

- Authentication type

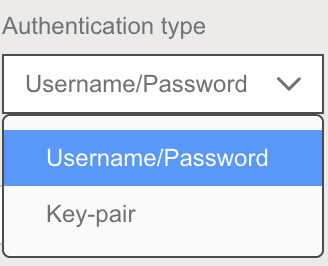

Details Supported Authentication Methods

IOTA Vue supports two authentication methods for connecting to Snowflake:

1. Username & Password Authentication

In order to use your Snowflake username and password, select Username/Password in the Authentication type dropdown:

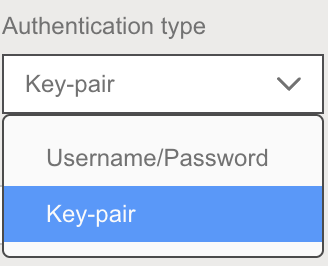

2. Key-Pair (RSA) Authentication

In order to use a key-pair, select Key-pair in the Authentication type dropdown:

To generate a private key and configure access, follow

Snowflake’s official guide.

Notes

When using Key-Pair authentication:

- Paste the entire private key, including the

BEGINandENDlines. - If the private key is encrypted, enter the passphrase in the corresponding field.

- Account details (Organization, Account, Warehouse, Schema, Role)

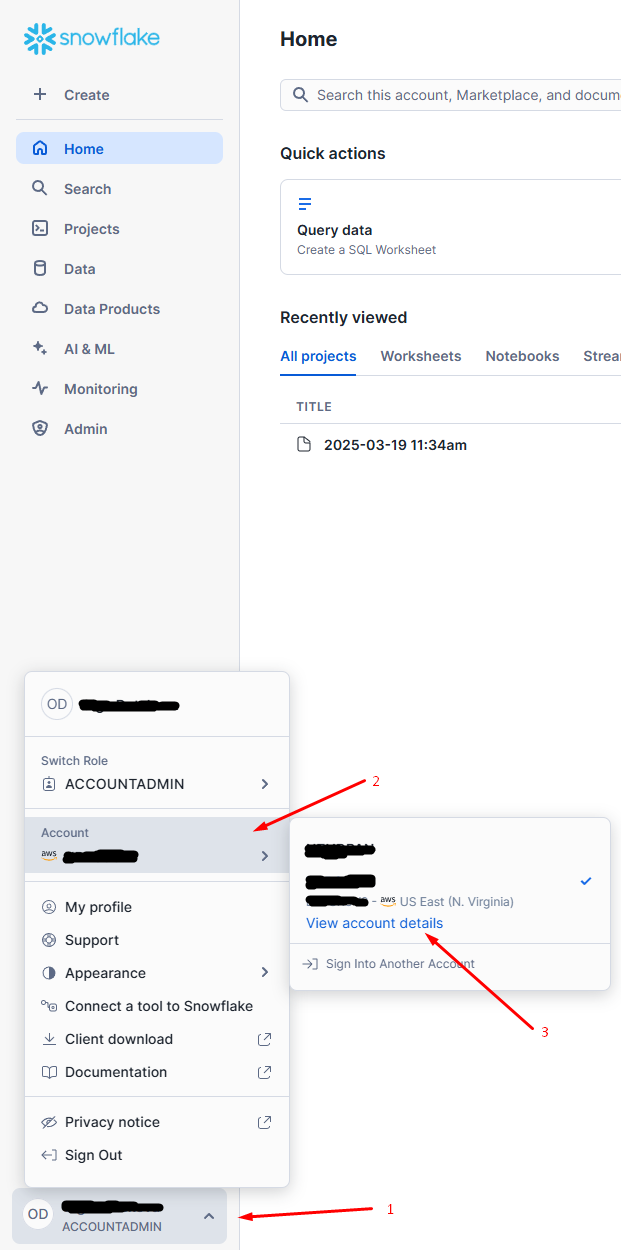

Where to find your Organization, Account, User, and Role

You can find this information in your Snowflake account:

- Click your profile icon in the bottom-left corner of the Snowflake UI.

- Select Account.

- Click View account details.

The details page will show all the required values.

- Credentials based on your selected authentication type

4. (Optional) Define Connection Timeout

Set how long the driver waits for responses from Snowflake before timing out.

5. (Optional) Restrict SQL Commands

Specify any SQL commands that should be blocked for compliance or security purposes.

6. Database Schema Settings

Configure schema-specific options if needed. For details, see Database schema settings.

7. (Optional) Quick Search Availability

Check Use in Quick Search if you want the connection to appear in the quick search sidebar. Otherwise, it will only be available via Advanced Search.

8. Save Connection

Click Apply to save your configuration.

If enabled, your connection immediately becomes available in Quick Search.

Connection Settings Overview

Use the table below as a reference when filling out the connection form.

Some fields depend on the selected authentication method.

| Setting | Required When | Description |

|---|---|---|

| Snowflake Organization | Always | Your Snowflake organization name |

| Snowflake Account | Always | Your assigned Snowflake account name |

| Snowflake Warehouse | Always | Virtual warehouse used to execute queries |

| Snowflake Schema | Always | Target schema within your database |

| Snowflake Role | Always | Role used to access resources |

| Authentication Type | Always | Choose between Username/Password and Key-pair |

| Database Name | Always | The name of the database to connect to |

| User | Always | Your Snowflake username |

| Password | Only for Username/Password | Your Snowflake login password |

| Client Private Key | Only for Key-pair authentication | Paste the full contents of your private key |

| Passphrase | If your private key is encrypted | Password protecting your private key |

Driver Configuration Details

The Snowflake Driver container automatically retrieves its configuration settings from environment variables at startup.

Tips

The Snowflake Driver container provided by IOTA Vue comes fully pre-configured, requiring no manual configuration after deployment.

Configuration parameters

| Environment name | Required | Default Value | Data Type | Description |

|---|---|---|---|---|

| DRIVERID | ⚫ | "" | string | Driver Id - used for logging purposes only |

| LOG_LEVEL | ⚫ | ERROR | string | Debug verbose level: ERROR, DEBUG, INFO |

| NATS_HOST | 🟢 | null | string | NATs server url with port |

| SEED_KEY_PATH | 🟢 | null | string | NATs NKey public key string. Private key must be defined in file: "privatekey.nk" |

| RQS_TOPIC | 🟢 | null | string | NATs topic on which driver listens for requests |

| HEALTH_TOPIC | ⚫ | "" | string | NATs topic for sending driver's health |

| HEALTH_SCHEDULING | ⚫ | 10000 | int | How often in milliseconds to send health data. Default: 10000 = 10 sec |

| LOGS_TOPIC | ⚫ | "" | string | NATs topic for sending driver's logs |

| METRICS_TOPIC | ⚫ | "" | string | NATs topic for sending driver's metrics |

| METRICS_SCHEDULING | ⚫ | 30000 | int | How often in milliseconds to send metrics data. Default: 30000 = 30 sec |

| ENABLE_METRICS | ⚫ | true | bool | Enables metrics reporting |

Database schema settings

This section provides instructions on how to configure the generic driver for data ingestion from SQL-like databases.

Settings are the JSON object described bellow

{

"tableSettings": [

{

"Id": string,

"Name": string,

"TimeColumn": string,

"TimeUnit": string,

"TimeStringFormat": string,

"DataColumns": []string,

"GroupBy": []string,

"LastGroupAsTagName": bool,

"IntervalForSnapshotsInMin": integer,

"TagPropertiesMap": JSON,

"SchemaName": string,

"MetadataTable": JSON

}

],

"datasetTags": [

{

"name": string,

"query": string,

"parameters": JSON

}

],

"writeDataTags": [

{

"name": string,

"query": string,

"parameters": JSON

}

]

}tableSettings

This section describes the DB tables structure.

- Id - (required) table settings identifier

- Name - (required) table name

- SchemaName - name of the schema stores table

- TimeColumn - (required) name of the column stores timestamp or datetime string

- TimeUnit - units of the time

- TimeStringFormat - format of the datetime string. Used when the TimeColumn stores datetime as string. The format is described here Golang datetime string format

- DataColumns - (required) list of the columns names stores tag values

- GroupBy - list of the columns names stores asset tree. Used with Asset-Like, Multi tagged Asset-like and Metadata-Value tables

- LastGroupAsTagName - (required) flag represents is last element in the GroupBy list is tag name column

- TagPropertiesMap - map of the key-value pairs where key is one of ["Uom", "Description"] and value is column name related to key tag property

- Uom - units of the tag measurement

- Description - tag description

- IntervalForSnapshotsInMin - minutes to narrow scanning a table, it is used for tabular data, if you know that your columns is updated every day, you can specify it in this property, and the driver will build a query like give me a snapshot in this range: now()-interval. Instead of give me snapshot order by time DESC, which scans the whole table. This option will significantly improve the speed of the query.

datasetTags

This section describes the custom queries to DB. This queries will be displayed in tag search.

- name - unique query name

- query - query string. May contains parameters placeholders

- parameters - map of the key-value pairs where key is the name of query parameter and value is the parameter value

See detailed description of the configuration.

writeDataTags

This section describes the custom INSERT|UPDATE|DELETE queries to DB.

- name - unique query name will be displayed in the Tag search panel.

- query - query string. May contains parameters placeholders

- parameters - map of the key-value pairs where key is the name of query parameter and value is the parameter value

See detailed description of the configuration.

Reading Data from Tabular Tables

In statistics, tabular data refers to data organized in a table format with rows and columns. Consider the following example of tabular data:

| ID | compressor_power | optimizer | relative_humidity | temperature | wet_bulb | ts |

|---|---|---|---|---|---|---|

| 0 | 0.0029228 | 0 | 62.35181596 | 79.64134287 | 70.29920291 | 2022-11-03 12:19 |

| 1 | 0.0029228 | 0 | 62.38810345 | 79.63862069 | 70.30710957 | 2022-11-03 12:20 |

| 2 | 0.0029228 | 0 | 62.4371127 | 79.63413962 | 70.31706517 | 2022-11-03 12:21 |

| 3 | 0.0029228 | 0 | 62.48612195 | 79.62965854 | 70.32702076 | 2022-11-03 12:22 |

Each column in this table represents a TAG. To configure the driver to read this data, use the following configuration:

{

"Id": "tabular_data",

"Name": "tabular_data",

"TimeColumn": "ts",

"DataColumns": [

"compressor_power",

"optimizer",

"relative_humidity",

"temperature",

"wet_bulb"

],

"GroupBy": null,

"LastGroupAsTagName": false

}Configuration Details:

| Config Item | Description |

|---|---|

| Id | Unique ID used internally by the driver |

| Name | Name of the database's table |

| TimeColumn | Specifies the column containing the time series |

| DataColumns | Array of columns to convert into tags |

| GroupBy | Should be empty or null for tabular data |

| LastGroupAsTagName | Should be false for tabular data |

This configuration allows the driver to effectively handle and read tabular data from SQL-like databases.

Reading Data from Asset-like Tables

Additionally, data can be stored in the following table format:

| ID | ts | siteid | machineid | lineid | tag | value |

|---|---|---|---|---|---|---|

| 1 | '2022-11-03 21:27:47' | Site 1 | MachineA | Line 1 | Temperature | -47.43 |

| 2 | '2022-11-03 21:27:47' | Site 1 | MachineA | Line 1 | Pressure | 25.50 |

| 3 | '2022-11-03 21:27:47' | Site 1 | MachineA | Line 1 | Power | -14.03 |

| 4 | '2022-11-03 21:27:47' | Site 1 | MachineB | Line 1 | Temperature | 79.61 |

| 5 | '2022-11-03 21:27:47' | Site 1 | MachineB | Line 1 | Pressure | -27.63 |

| 6 | '2022-11-03 21:27:47' | Site 1 | MachineB | Line 1 | Power | 99.65 |

| 7 | '2022-11-03 21:27:47' | Site 1 | MachineC | Line 1 | Temperature | 32.08 |

| 8 | '2022-11-03 21:27:47' | Site 1 | MachineC | Line 1 | Pressure | 79.92 |

| 9 | '2022-11-03 21:27:47' | Site 1 | MachineC | Line 1 | Power | 162.36 |

| 10 | '2022-11-03 21:27:47' | Site 2 | MachineA | Line 1 | Temperature | 91.21 |

| 11 | '2022-11-03 21:28:47' | Site 2 | MachineB | Power | 38.12 | |

| 12 | '2022-11-03 21:41:47' | Site 1 | MachineB | Pressure | -27.70 |

This table structure can be represented as an asset tree:

- Site1

- Line 1

- MachineA

- Temperature

- Pressure

- Power

- MachineB

- Temperature

- Pressure

- Power

- MachineC

- Temperature

- Pressure

- Power

- MachineA

- Line 1

- Site2

- Line 1

- MachineA

- Temperature

- MachineB

- Power

- MachineA

- Line 1

The configuration for processing such table data is as follows:

{

"ID": "asset_tree_data",

"Name": "asset_tree_data",

"TimeColumn": "ts",

"DataColumns": ["value"],

"GroupBy": ["siteid", "lineid", "machineid", "tag"],

"LastGroupAsTagName": true

}Configuration Details:

| Config Item | Description |

|---|---|

| Id | Unique ID used internally by the driver |

| Name | Name of the database's table |

| TimeColumn | Specifies the column containing the time series |

| DataColumns | Array of columns to convert into tags |

| GroupBy | An array that defines the hierarchy for creating the asset tree structure |

| LastGroupAsTagName | Should be true, as we want to show the 'value' column as the value of the last tag in the hierarchy |

Reading Data from Multi tagged Asset-like tables

Data can be stored in the following table format:

| ts | siteid | machineid | lineid | Temperature | Pressure |

|---|---|---|---|---|---|

| '2022-11-03 21:27:47' | Site 1 | MachineA | Line 1 | 25.50 | -47.43 |

| '2022-11-03 21:27:47' | Site 1 | MachineB | Line 1 | 34.0 | 79.61 |

| '2022-11-03 21:27:47' | Site 2 | MachineA | Line 1 | 45.76 | 91.21 |

The peculiarity of this type of tables is that each record has the data of the asset tree and the values of all its tags, the names of which are the names of the corresponding columns of the table.

This table structure can be represented as an asset tree:

- Site1

- Line 1

- MachineA

- Temperature

- Pressure

- MachineB

- Temperature

- Pressure

- MachineC

- Temperature

- Pressure

- MachineA

- Line 1

- Site2

- Line 1

- MachineA

- Temperature

- Pressure

- MachineA

- Line 1

The configuration for processing such table data is as follows:

{

"ID": "hybrid_asset_tree",

"Name": "hybrid_asset_tree",

"TimeColumn": "ts",

"DataColumns": ["Temperature", "Pressure"],

"GroupBy": ["siteid", "lineid", "machineid"],

"LastGroupAsTagName": false

}Configuration Details:

| Config Item | Description |

|---|---|

| Id | Unique ID used internally by the driver |

| Name | Name of the database's table |

| TimeColumn | Specifies the column containing the time series |

| DataColumns | Array of columns to convert into tags |

| GroupBy | An array that defines the hierarchy for creating the asset tree structure |

| LastGroupAsTagName | Should be false, as we want to show the 'DataColumns' list columns as the tags in the hierarchy |

Reading data From Metadata and Value Data

Sometimes data is stored in a dimension table and a fact table. This is a popular model choice because it allows flexibility in the metadata (the dimension table) while allowing the value table (the fact table) to grow.

Metadata (Dimension Table)

| siteId | lineId | machineId | tag | tagId | unit | interpolation | valuetype |

|---|---|---|---|---|---|---|---|

| Site 1 | MachineA | Line 1 | Temperature | Site 1.Line 1.MachineA.Temperature123 | s | linear | numeric |

| Site 1 | MachineA | Line 1 | Pressure | Site 1.Line 1.MachineA.Pressure123 | megadonut/coffee | linear | numeric |

| Site 1 | MachineA | Line 1 | Power | Site 1.Line 1.MachineA.Power123 | ft/s | linear | numeric |

| Site 1 | MachineA | Line 1 | State_s | Site 1.Line 1.MachineA.State_s123 | step | linear | string |

| Site 1 | MachineA | Line 2 | Temperature | Site 1.Line 2.MachineA.Temperature123 | degC | linear | numeric |

Data (Fact Table)

| TS | tagId | valuetype | Value_S | Value_D |

|---|---|---|---|---|

| 2022-11-04T20:17:49 | Site 1.Line 1.MachineA.Temperature123 | numeric | 36.76654 | |

| 2022-11-04T20:17:49 | Site 1.Line 1.MachineA.Pressure123 | numeric | -214.179 | |

| 2022-11-04T20:17:49 | Site 1.Line 1.MachineA.Power123 | numeric | -33.6876 | |

| 2022-11-04T20:17:49 | Site 1.Line 1.MachineA.State_s123 | string | strval | |

| 2022-11-04T20:17:49 | Site 1.Line 2.MachineA.Temperature123 | numeric | 41.31567 | |

| 2022-11-04T20:17:49 | Site 1.Line 2.MachineA.Pressure123 | numeric | -87.6572 | |

| 2022-11-04T20:17:49 | Site 1.Line 2.MachineA.Power123 | numeric | 14.04372 | |

| 2022-11-04T20:17:49 | Site 1.Line 2.MachineA.State_s123 | string | strval |

The configuration for processing data from both tables is as follows:

{

"Id": "DataAndMetadata",

"Name": "Data",

"SchemaName": "",

"TimeColumn": "TS",

"DataColumns": [

"Value_D",

"Value_S"

],

"GroupBy": [

"siteId",

"lineId",

"machineId",

"tag"

],

"LastGroupAsTagName": true,

"ColumnId": "tagId",

"MetadataTable": {

"Name": "Metadata",

"SchemaName": "",

"UniqueKeyColumnName": "tagId",

"ValueTypeColumnName": "valuetype",

"DataColumnsTypes": {

"Value_S": "string",

"Value_D": "numeric"

}

}

}Configuration Details:

| Config Item | Description |

|---|---|

| MetadataTable | A section related to mapping metadata with actual data |

| Name | Name of a table with metadata |

| SchemaName | Name of DB schema which contains a table |

| UniqueKeyColumnName | The name of the column that stores the unique tag key |

| ValueTypeColumnName | The name of the column where the tag data type is stored |

| DataColumnsTypes | A map in which the key is the column name of the data table, and the value is one of the types listed in the column of the metadata table, which is specified in the "ValueTypeColumnName" field |

Virtual Tags (Datasets)

There are two options for executing custom SQL queries:

Using a Query tag from Tags and Writing SQL Query Manually: This involves using the "Query from Tags" feature to manually write your SQL query.

Using Virtual Tags with Hardcoded SQL: This option involves utilizing virtual tags that contain pre-defined SQL queries.

For example, let's define a virtual tag in the configuration, named "DST2," with the following settings:

{

"name": "dst2",

"query": "SELECT * FROM tabular_data WHERE temperature > {{temp}} LIMIT 10",

"parameters": {

"temp": 10.0

}

}| Config Item | Description |

|---|---|

| Name | The name of the virtual tag. |

| Query | The SQL query that will be executed. It can contain specific arguments. |

| Parameters | A map of parameters that can be injected into the query. If this map is empty, the argument will be injected from the UI settings. |

Write data Tags

This type of tags allow to user to execute custom INSERT|UPDATE|DELETE queries to DB.

This type of tags has the following configuration:

{

"name": "addRecord",

"query": "INSERT INTO tabular_data (tsCol, valueCol) VALUES ({{ts}}, {{temp}})",

"parameters": {

"timeColumn": "ts",

"ts": 0,

"temp": 10.0

}

}| Config Item | Description |

|---|---|

| Name | The name of the virtual tag. |

| Query | The SQL query that will be executed. It can contain specific arguments. |

| Parameters | A map of parameters that can be injected into the query. The parameters must contain the 'timeColumn' property, whose value is the name of the placeholder to which the timestamp value will be passed. |

By default, the time column format is "2006-01-02T15:04:05Z07:00. If you need to change the time format, just add the "timeFormat" property with the format you need to the Parameters`. How to make the time format string is described here.

For example, date format in Oracle DB is configured as 'YYYY.MM.DD HH24:MI:SS', so the write data Tag will look like this:

{

"name": "addRecord",

"query": "INSERT INTO tabular_data (tsCol, valueCol) VALUES ({{ts}}, {{temp}})",

"parameters": {

"timeColumn": "ts",

"timeFormat": "2006.01.02 15:04:05",

"ts": 0,

"temp": 10.0

}

}Write data tags usage example

In this example, we will create a button, when clicked, the write data tag will execute the query configured in it.

- Define write data Tag configuring it in the driver's DB schema settings.

For example, let's define write data tag with query that inserts some value and a timestamp into a 'tabular_data' table with columns 'tsCol' and 'valueCol'.

{

"writeDataTags": [

{

"name": "Write_Value",

"query": "INSERT INTO tabular_data (tsCol, valueCol) VALUES ({{ts}}, {{value}})",

"parameters": {

"timeColumn": "ts",

"ts": 0,

"value": 0

}

}

]

}- Write a script that sends the 'writedata' request to the driver when the button is pressed.

IMPORTANT! The value of the time column in the request sended from UI must be set in milliseconds, i.e. you need to call

valueOf()method of the Date class.let val = Math.random() * 100; let writeData = async (val) => { let req = { "ts": new Date().valueOf(), "value": val }; let resList = callDriver({}, 'writedata', req, [this.channels[0].fqn]); } await writeData(val);

- Search tag 'Write_Value' and drop it on the Button.

That's all. Now, by clicking on our button, you can send a request to insert data.

Configuration example

Configure driver to connect to the database with tabular table tabular_data and asset-tree table asset_tree_data. Additionally define virtual tag to receive temperature above 10 degrees by default.

{

"tableSettings": [

{

"Id": "tabular_data",

"Name": "tabular_data",

"TimeColumn": "ts",

"TimeUnit": "",

"DataColumns": [

"compressor_power",

"optimizer",

"relative_humidity",

"temperature",

"wet_bulb"

],

"GroupBy": null,

"LastGroupAsTagName": false

},

{

"ID": "asset_tree_data",

"Name": "asset_tree_data",

"TimeColumn": "ts",

"DataColumns": [

"value"

],

"GroupBy": [

"siteid",

"machineid",

"lineid",

"tag"

],

"LastGroupAsTagName": true,

"TagProperties": {

"uom": "units"

}

}

],

"datasetTags": [

{

"name": "GetTemperatureAbove10degrees",

"query": "SELECT * FROM tabular_data WHERE temperature > {{temp}} LIMIT 10",

"parameters": {

"temp": 10.0

}

}

]

}Golang datetime string format

| Unit | Golang Layout | Examples | Note |

|---|---|---|---|

| Year | 06 | 21, 81, 01 | |

| Year | 2006 | 2021, 1981, 0001 | |

| Month | January | January, February, December | |

| Month | Jan | Jan, Feb, Dec | |

| Month | 1 | 1, 2, 12 | |

| Month | 01 | 01, 02, 12 | |

| Day | Monday | Monday, Wednesday, Sunday | |

| Day | Mon | Mon, Wed, Sun | |

| Day | 2 | 1, 2, 11, 31 | |

| Day | 02 | 01, 02, 11, 31 | zero padded day of the month |

| Day | _2 | ⎵1, ⎵2, 11, 31 | space padded day of the month |

| Day | 002 | 001, 002, 011, 031, 145, 365 | zero padded day of the year |

| Day | __2 | ⎵⎵1, ⎵⎵2, ⎵11, ⎵31, 365, 366 | space padded day of the year |

| Part of day | PM | AM, PM | |

| Part of day | pm | am, pm | |

| Hour 24h | 15 | 00, 01, 12, 23 | |

| Hour 12h | 3 | 1, 2, 12 | |

| Hour 12h | 03 | 01, 02, 12 | |

| Minute | 4 | 0, 4 ,10, 35 | |

| Minute | 04 | 00, 04 ,10, 35 | |

| Second | 5 | 0, 5, 25 | |

| Second | 05 | 00, 05, 25 | |

| 10-1 to 10-9 s | .0 .000000000 | .1, .199000000 | Trailing zeros included |

| 10-1 to 10-9 s | .9 .999999999 | .1, .199 | Trailing zeros omitted |

| Time zone | MST | UTC, MST, CET | |

| Time zone | Z07 | Z, +08, -05 Z is for UTC | |

| Time zone | Z0700 | Z, +0800, -0500 Z is for UTC | |

| Time zone | Z070000 | Z, +080000, -050000 Z is for UTC | |

| Time zone | Z07:00 | Z, +08:00, -05:00 Z is for UTC | |

| Time zone | Z07:00:00 | Z, +08:00:00, -05:00:00 Z is for UTC | |

| Time zone | -07 | +00, +08, -05 | |

| Time zone | -0700 | +0000, +0800, -0500 | |

| Time zone | -070000 | +000000, +080000, -050000 | |

| Time zone | -07:00 | +00:00, +08:00, -05:00 | |

| Time zone | -07:00:00 | +00:00:00, +08:00:00, -05:00:00 |